I’d like to know how long it takes to migrate from PostgreSQL Single Server to Flexible Server.

Deploy a Linux VM with Ultra Disk

Firstly, the best configuration to generate the dummy data and to ingest it to a source server is a Linux VM with Ultra Disk on Azure. I deployed ubuntu 20.04 on Standard D64ds v4 in Azure Japan East with 1024GB / 80,000 IOPS / 2,000MB/s of Ultra Disk as a source.

After deploying, I formatted /dev/sdc with fdisk command, made filesystem with mkfs.xfs command, and mounted the partition on /opt.

To connect PostgreSQL, I installed the postgresql-client package and so on.

$ sudo su

# apt-get update

# apt-get -y install postgresql-clientPrepare the dummy data

Wrote a simple python script to generate the dummy data.

# cat /opt/generate-dummy.py

#!/usr/bin/python3

from faker import Faker

import csv

import sys

import multiprocessing as multi

import random

def usemulti(num):

amnt = 1000000

res = [[num * amnt + i, fake.user_name(), fake.name(), random.choice(['M', 'F', 'N']), fake.address(), fake.email(), fake.date_of_birth()] for i in range(1, amnt+1)]

with open('./dummy{:03d}.data'.format(num), 'w') as f:

writer = csv.writer(f, quoting=csv.QUOTE_ALL)

writer.writerows(res)

cnt = int(sys.argv[1])

fake = Faker('ja_JP')

p = multi.Pool()

result = p.map(usemulti, range(cnt))

p.close()The script and another I’ll mention later require some python modules.

# pip3 install faker psycopg2And I called the script with an argument, ‘1000’ to generate 1,000 files of dummy data, about 1B of rows.

# cd /opt

# chmod 755 generate-dummy.py

# ./generate-dummy.py 1000

<< it took a few hours>>

# mkdir chunks

# mv dummy* chunks/

# du -sh chunks/

139G chunks/Deploy PostgreSQL Single Server as a source

Selected Azure Database for PostgreSQL Single Server with 32vCPU / 4096GB storage.

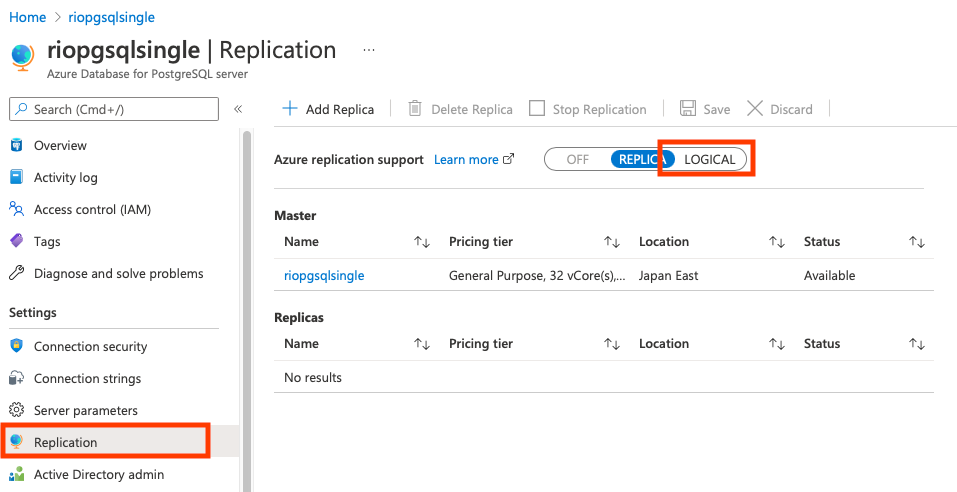

Set Logical replication

And for migration, I needed to enable logical replication for the Single Server. Clicked [Replication] and [LOGICAL].

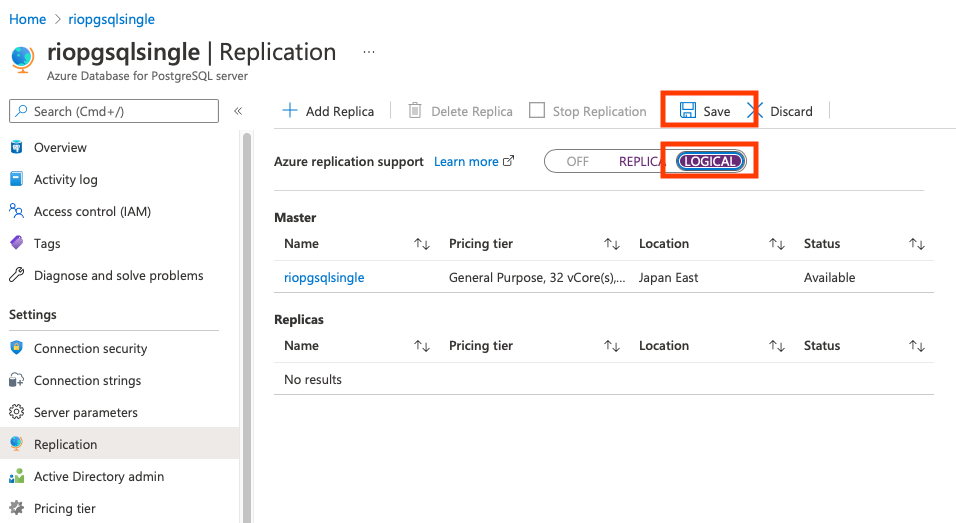

Clicked [Save].

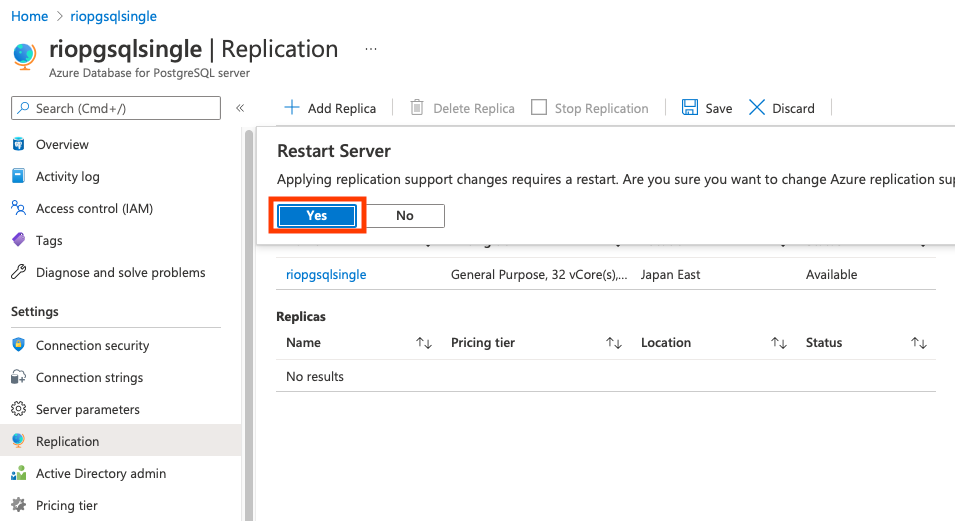

Finally, I clicked [Yes] to restart the server.

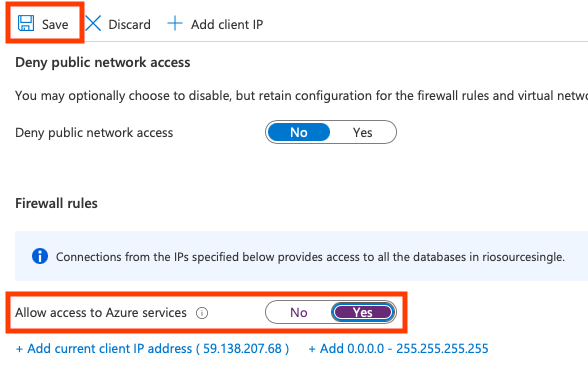

Connection security

DMS would access both Single and Flexible. I checked whether DMS could access both of them or not.

Load the dummy data

Wrote a script to ingest the dummy data because PostgreSQL 11 on Single Server didn’t permit the ingestion from stdin using psql's '\COPY' command. However, I could find the connection string for Python on Azure Portal.

Before executing the script, I exported PGPASSWORD env variables and created a table.

$ export PGPASSWORD="my password"

$ psql 'host=riosourcesingle.postgres.database.azure.com port=5432 dbname=postgres user=rifujita@riosourcesingle sslmode=require' -c \

'CREATE TABLE users (id BIGSERIAL, username TEXT, name TEXT, sex VARCHAR(1), address TEXT, email TEXT, birthdate DATE);'BTW, the script consumed a large amount of memory to accelerate bulk insert with execute_values() from psycopg2. This function seemed like a kind of prepared statement.

# cat bulk-load.py

#!/usr/bin/env python3

import os

import csv

import multiprocessing as multi

import psycopg2

from psycopg2.extras import execute_values

def ingest(num):

with psycopg2.connect('host=riosourcesingle.postgres.database.azure.com port=5432 dbname=postgres user=rifujita@riosourcesingle password={} sslmode=require'.format(os.getenv('PGPASSWORD'))) as con:

with con.cursor() as cur:

with open('/opt/chunks/dummy{:03d}.data'.format(num), 'r') as f:

reader = csv.reader(f, quoting=csv.QUOTE_ALL)

params = []

for row in reader:

params.append(tuple(row))

query = "INSERT INTO users (id, username, name, sex, address, email, birthdate) VALUES %s"

ret = execute_values(cur, query, params)

con.commit()

def main():

p = multi.Pool()

result = p.map(ingest, range(1000))

p.close()

if __name__ == "__main__":

main()Ran the script.

# chmod 755 bulk-load.py

# ./bulk-load.py

<< it took a hour or so>>Connected to PostgreSQL and checked the size of the database.

$ psql "host=riosourcesingle.postgres.database.azure.com port=5432 dbname=postgres user=rifujita@riosourcesingle sslmode=require" -c \

"SELECT pg_size_pretty(pg_database_size('postgres'));"

pg_size_pretty

----------------

148 GB

(1 row)Deploy PostgreSQL Flexible Server as a target

I Selected Azure Database for PostgreSQL Flexible Server, General Purpose, D32ds_v4, 32vCPU, 4096GiB storage as a target.

How to migrate the data?

There are a several options to migrate the data.

- Using pg_dump / pg_restore

- Using a customized app written in Python, C#, and so on

- Using Azure DMS (Data Migration Service)

I’ll introduce the way how I used Azure DMS as the followings. Because I guess you know how to use pg_dump / pg_restore and you can write a code like one above in Python.

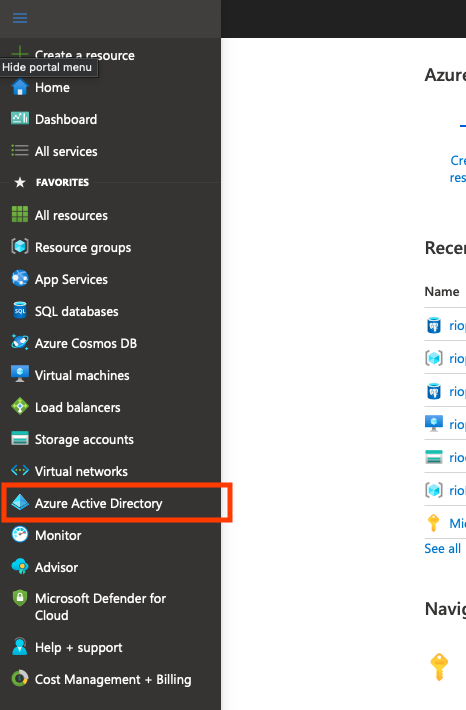

Configure Azure AD app

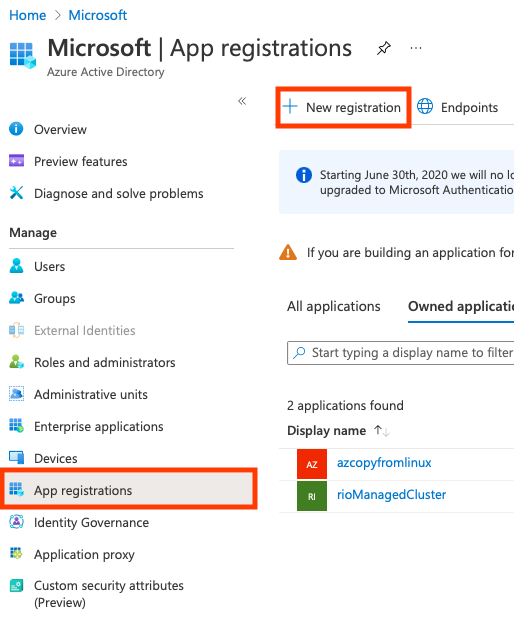

Make an AAD enterprise app

I clicked [Azure Active Directory] on the top-left menu.

And then, I clicked [App registration] and [New registration].

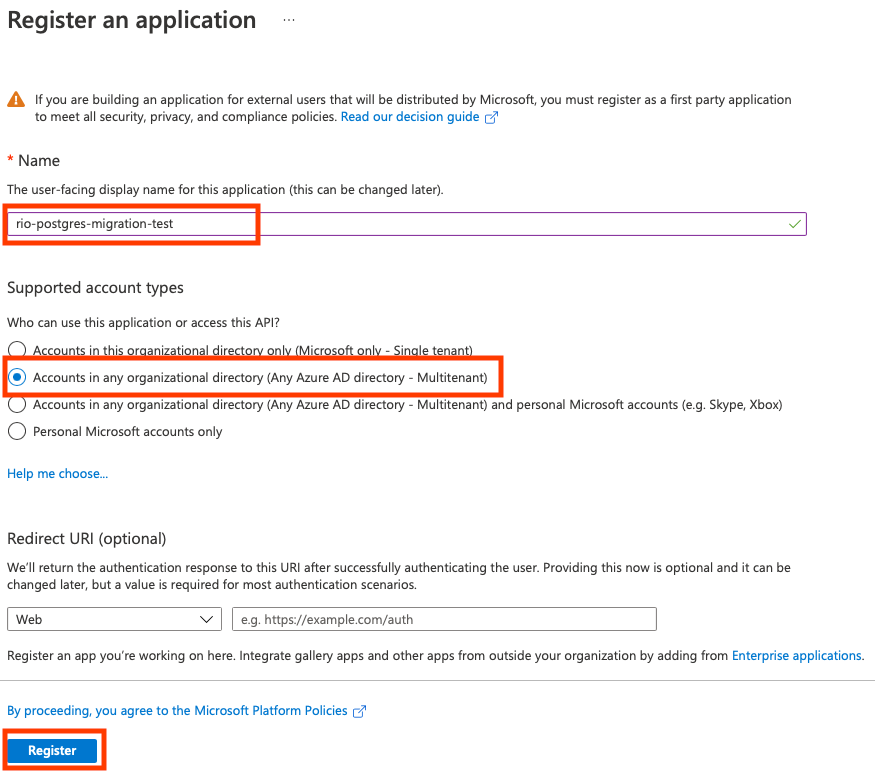

I input [the user-facing display name], e.g. “rio-postgres-migration-test” and chose [Accounts in any organizational directory (Any Azure AD directory – Multitenant)] in [Supported account types]. And then, clicked [Register].

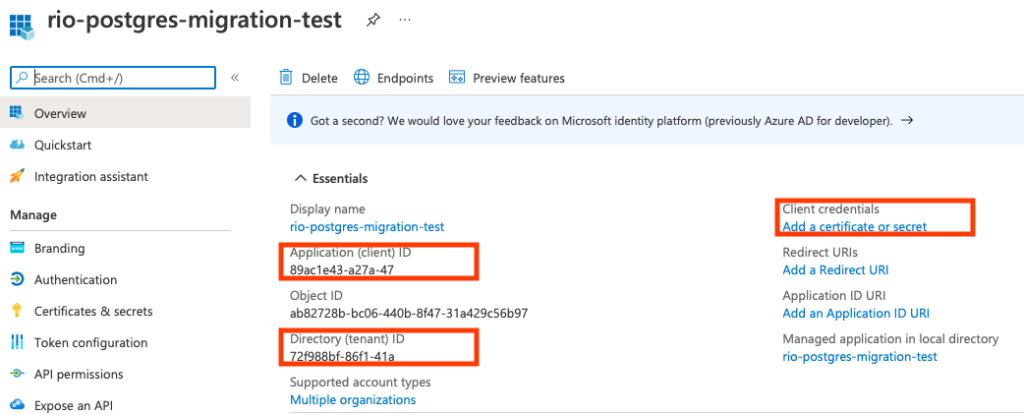

Saved the [Application (client) ID] and the [Directory (tenant) ID] for the following steps. And then, clicked [Add a certificate or secret].

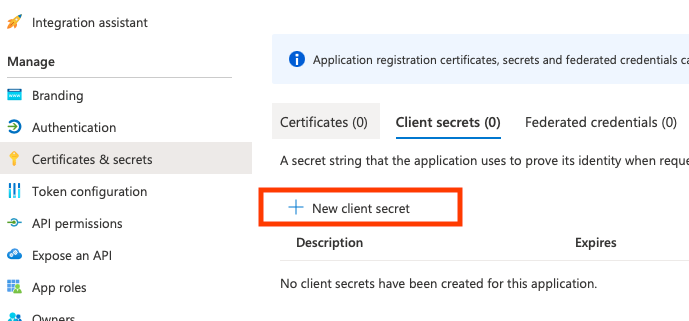

Clicked [New client secret].

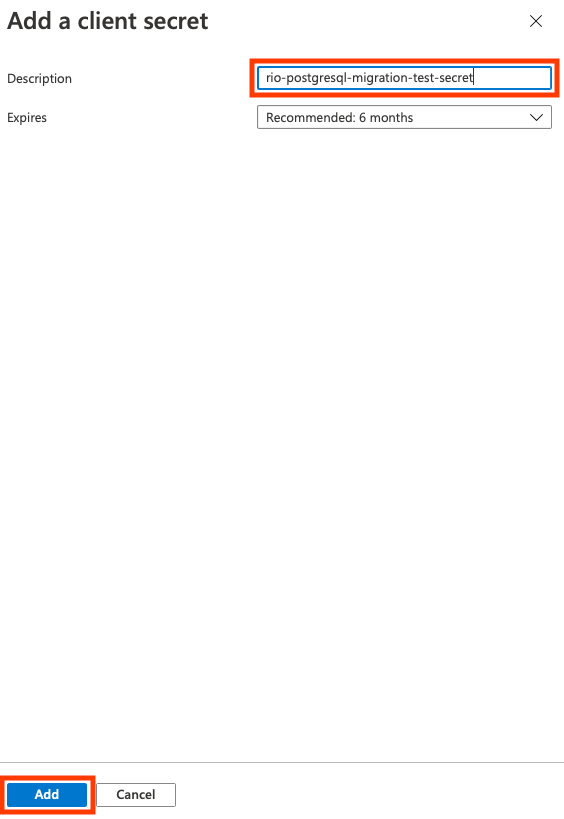

In the slide-in sheet from right edge, input [Description], e.g. “rio-postgresql-migration-test-secret” and clicked [Add].

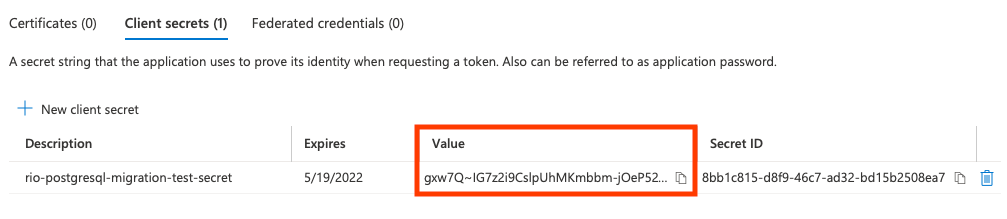

Saved the [Value] for the following steps as well as app ID and tenant ID. [Secret ID] is not required.

Add permission to the app

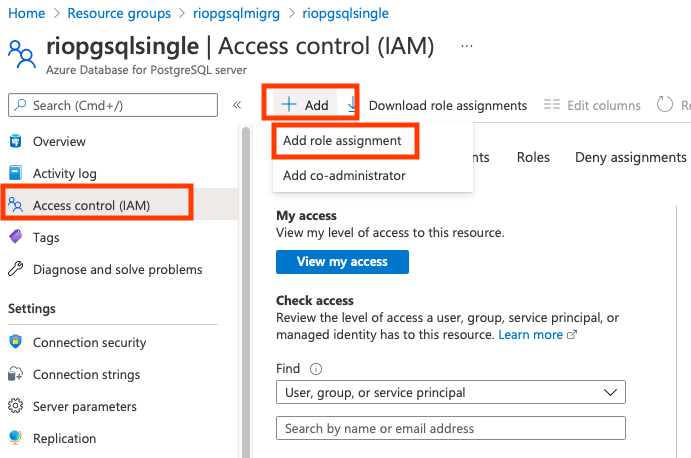

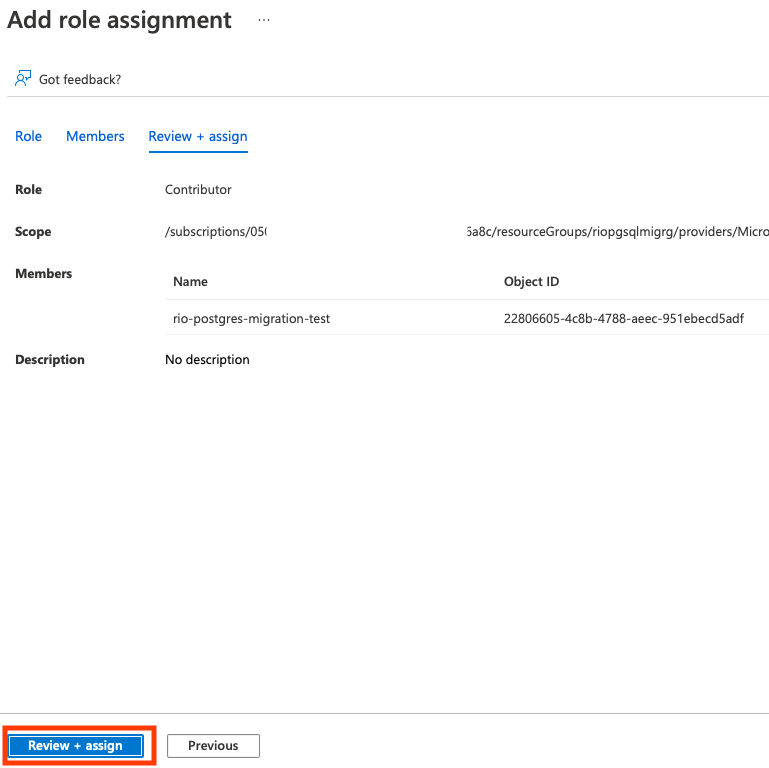

Found the source Single Server, moved to [Access control (IAM)], clicked [Add], and pointed [Add role assignment].

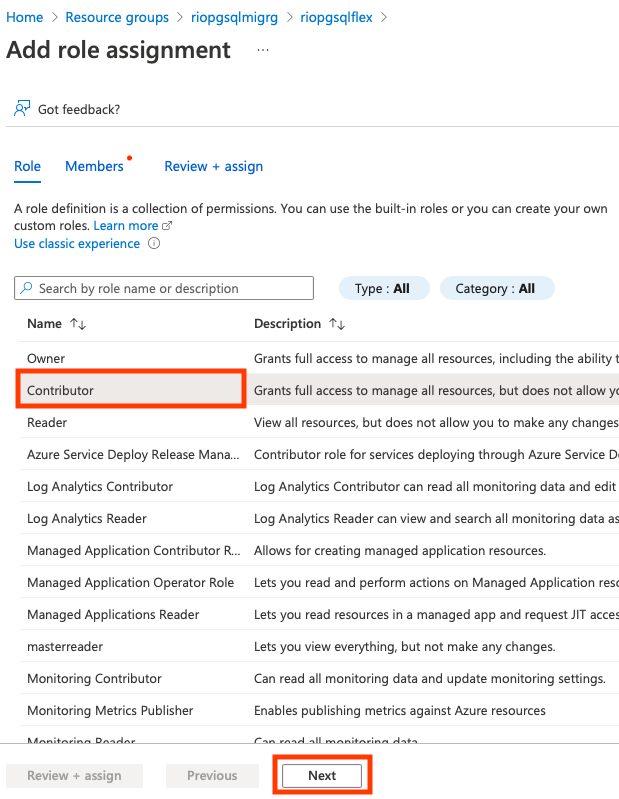

Selected [Contributor] in the Role blade and clicked [Next].

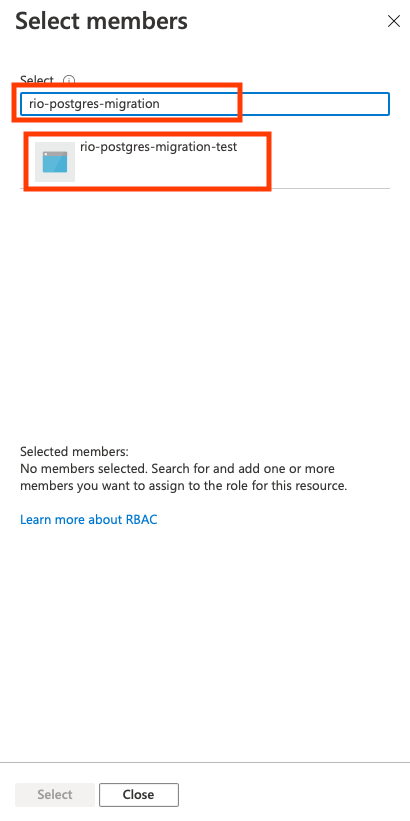

Input the name to find the app I created, and I clicked the app successfully searched.

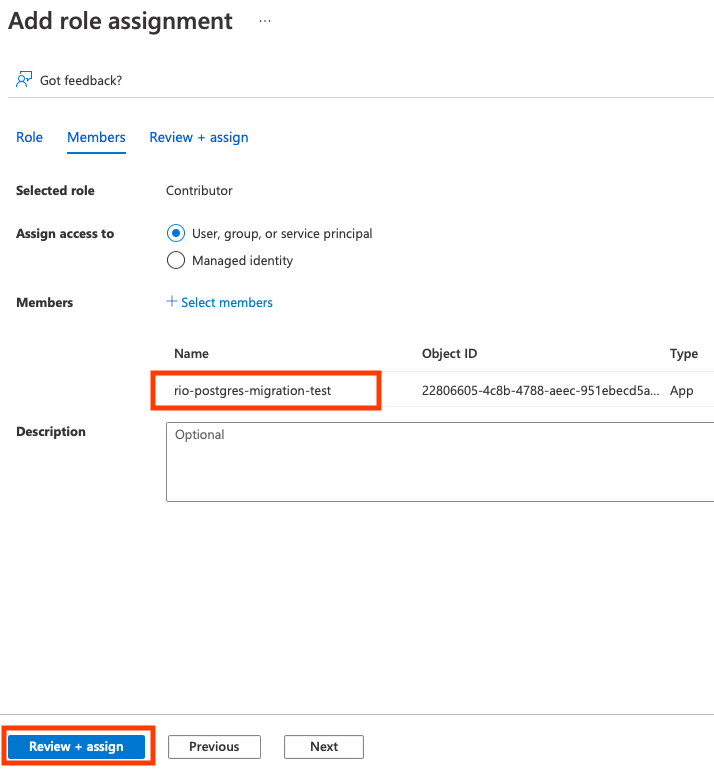

Made sure the app name existed and clicked [Review + assign].

Clicked [Review + assign] again.

Notice!!: Same steps for the Resource Group where the targeted Flexible Server has been deployed were also required. DMS would be created in the target Resource Group.

Create migrations

The latest version of Azure CLI supports migration from Single to Flexible.

$ az postgres flexible-server migration --help

Group

az postgres flexible-server migration : Manage migration workflows for PostgreSQL Flexible

Servers.

Command group 'postgres flexible-server' is in preview and under development. Reference

and support levels: https://aka.ms/CLI_refstatus

This command group is experimental and under development. Reference and support levels:

https://aka.ms/CLI_refstatus

Commands:

check-name-availability : Checks if the provided migration-name can be used.

create : Create a new migration workflow for a flexible

server.

delete : Delete a specific migration.

list : List the migrations of a flexible server.

show : Get the details of a specific migration.

update : Update a specific migration.

To search AI knowledge base for examples, use: az find "az postgres flexible-server

migration"

Please let us know how we are doing: https://aka.ms/azureclihatsIn-preview issue

I tested this flow with Azure CLI 2.30.0. And the functions related to Flexible Server migration called the 2020-02-14-privatepreview version of API. But it didn’t support some migration features.

I replaced the version 2020-02-14-privatepreview with 2021-06-01-preview in the python file, 'flexible_server_custom_common.py' in the directory, 'azure-cli/2.30.0_1/libexec/lib/python3.10/site-packages/azure/cli/command_modules/rdbms/'.

Prepare migrationBody.json for migration

Firstly, I needed to create a JSON named "migrationBody.json" from the following templates. The templates vary by the type of network where the source and the target are connected to.

This template is for public access.

// the commented out parameters are optional

{

"properties": {

"SourceDBServerResourceId": "subscriptions/<subscription id>/resourceGroups/<source rg name>/providers/Microsoft.DBforPostgreSQL/servers/<source pgsql server name>",

//"SourceDBServerFullyQualifiedDomainName": "fqdn of the source server as per the custom DNS server",

//"TargetDBServerFullyQualifiedDomainName": "fqdn of the target server as per the custom DNS server"

"SecretParameters": {

"AdminCredentials": {

"SourceServerPassword": "<password>",

"TargetServerPassword": "<password>"

},

"AADApp": {

"ClientId": "<client id>",

"TenantId": "<tenant id>",

"AadSecret": "<secret>"

}

},

//"MigrationResourceGroup": {

// "ResourceId": "subscriptions/<subscription id>/resourceGroups/<target rg name>"

//},

//"SetupLogicalReplicationOnSourceDBIfNeeded":"true",

//"OverwriteDBsInTarget":"true"

"DBsToMigrate": [

"<db name1>","<db name2>"

]

}

}And this is for the Single Server using a private end point or the Flexible Server using VNet integration.

//commented parameters are optional

{

"properties": {

"SourceDBServerResourceId": "subscriptions/<subscription id>/resourceGroups/<source rg name>/providers/Microsoft.DBforPostgreSQL/servers/<source pgsql server name>",

//"SourceDBServerFullyQualifiedDomainName": "fqdn of the source server as per the custom DNS server",

//"TargetDBServerFullyQualifiedDomainName": "fqdn of the target server as per the custom DNS server",

"SecretParameters": {

"AdminCredentials": {

"SourceServerPassword": "<password>",

"TargetServerPassword": "<password>"

},

"AADApp": {

"ClientId": "<client id>",

"TenantId": "<tenant id>",

"AadSecret": "<secret>"

}

},

"DBsToMigrate": [

"<db name1>","<db name2>"

],

"MigrationResourceGroup":

{

//"ResourceId":"subscriptions/<subscriptionid>/resourceGroups/<temp_rg_name>",

"SubnetResourceId":"/subscriptions/<subscriptionid>/resourceGroups/<rg_name>/providers/Microsoft.Network/virtualNetworks/ <Vnet_name>/subnets/<subnet_name>"

},

//"SetupLogicalReplicationOnSourceDBIfNeeded":"true",

//"OverwriteDBsInTarget":"true"

}

}Anyway, I deployed both servers with the configuration accepting public access. So, I made a JSON based on the former template.

{

"properties": {

"SourceDBServerResourceId": "subscriptions/050e5060-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/riosourcesinglerg/providers/Microsoft.DBforPostgreSQL/servers/riosourcesingle",

"SecretParameters": {

"AdminCredentials": {

"SourceServerPassword": "Cn##########",

"TargetServerPassword": "gn##########"

},

"AADApp": {

"ClientId": "89ac1e43-xxxx-xxxx-xxxx-xxxxxxxxxxxx",

"TenantId": "72f988bf-xxxx-xxxx-xxxx-xxxxxxxxxxxx",

"AadSecret": "gxw7Q~XXXXXXXXXXXXX-jOeP52WA.4w"

}

},

"SetupLogicalReplicationOnSourceDBIfNeeded":"true",

"OverwriteDBsInTarget":"true",

"DBsToMigrate": [

"postgres"

]

}

}

Create migration

And I executed a command.

$ az postgres flexible-server migration create -n riotargetflexible -b 'migrationBody.json' -g riotargetflexiblerg

{

"id": "/subscriptions/050e5060-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/riotargetflexiblerg/providers/Microsoft.DBforPostgreSQL/flexibleServers/riotargetflexible/migrations/128b1387-d6d5-4f2f-a58b-f765f22352e7",

"location": "Japan East",

"name": "128b1387-d6d5-4f2f-a58b-f765f22352e7",

"properties": {

"currentStatus": {

"state": "InProgress"

},

"dBsToMigrate": [

"postgres"

],

"migrationDetailsLevel": "Default",

"migrationId": "03bcafd3-ec31-430f-b99d-b6a5f17af046",

"migrationName": "128b1387-d6d5-4f2f-a58b-f765f22352e7",

"migrationWindowStartTimeInUtc": "2021-11-20T12:58:59.1944872Z",

"overwriteDBsInTarget": true,

"setupLogicalReplicationOnSourceDBIfNeeded": true,

"sourceDBServerResourceId": "subscriptions/050e5060-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/riosourcesinglerg/providers/Microsoft.DBforPostgreSQL/servers/riosourcesingle",

"targetDBServerResourceId": "/subscriptions/050e5060-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/riotargetflexiblerg/providers/Microsoft.DBforPostgreSQL/flexibleServers/riotargetflexible"

},

"resourceGroup": "riotargetflexiblerg",

"type": "Microsoft.DBforPostgreSQL/flexibleServers/migrations"

}I checked the migration status. The initial state was “PerformingPreRequisiteSteps”.

$ az postgres flexible-server migration list -n riotargetflexible -g riotargetflexiblerg --filter Active

{

"value": [

{

"id": "/subscriptions/050e5060-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/riotargetflexiblerg/providers/Microsoft.DBforPostgreSQL/flexibleServers/riotargetflexible/migrations/128b1387-d6d5-4f2f-a58b-f765f22352e7",

"location": "Japan East",

"name": "128b1387-d6d5-4f2f-a58b-f765f22352e7",

"properties": {

"currentStatus": {

"currentSubStateDetails": {

"currentSubState": "PerformingPreRequisiteSteps"

},

"state": "InProgress"

},

"dBsToMigrate": [

"postgres"

],

"migrationDetailsLevel": "Full",

"migrationId": "03bcafd3-ec31-430f-b99d-b6a5f17af046",

"migrationName": "128b1387-d6d5-4f2f-a58b-f765f22352e7",

"migrationWindowStartTimeInUtc": "2021-11-20T12:58:59.1944872Z",

"overwriteDBsInTarget": true,

"setupLogicalReplicationOnSourceDBIfNeeded": true,

"sourceDBServerMetadata": {

"location": "japaneast",

"sku": {

"name": "GP_Gen5_32",

"tier": "GeneralPurpose"

},

"storageMB": 4219904,

"version": "11"

},

"sourceDBServerResourceId": "subscriptions/050e5060-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/riosourcesinglerg/providers/Microsoft.DBforPostgreSQL/servers/riosourcesingle",

"targetDBServerMetadata": {

"location": "Japan East",

"sku": {

"name": "Standard_D32ds_v4",

"tier": "GeneralPurpose"

},

"storageMB": 4194304,

"version": "12"

},

"targetDBServerResourceId": "/subscriptions/050e5060-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/riotargetflexiblerg/providers/Microsoft.DBforPostgreSQL/flexibleServers/riotargetflexible"

},

"resourceGroup": "riotargetflexiblerg",

"type": "Microsoft.DBforPostgreSQL/flexibleServers/migrations"

}

]

}After a several minutes, I could see the status changed to “MigratingData”.

{

"value": [

{

"id": "/subscriptions/050e5060-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/riotargetflexiblerg/providers/Microsoft.DBforPostgreSQL/flexibleServers/riotargetflexible/migrations/128b1387-d6d5-4f2f-a58b-f765f22352e7",

"location": "Japan East",

"name": "128b1387-d6d5-4f2f-a58b-f765f22352e7",

"properties": {

"currentStatus": {

"currentSubStateDetails": {

"currentSubState": "MigratingData",

"dbDetails": {

"postgres": {

"appliedChanges": 0,

"cdcDeleteCounter": 0,

"cdcInsertCounter": 0,

"cdcUpdateCounter": 0,

"databaseName": "postgres",

"fullLoadCompletedTables": 0,

"fullLoadErroredTables": 0,

"fullLoadLoadingTables": 0,

"fullLoadQueuedTables": 1,

"incomingChanges": 0,

"initializationCompleted": false,

"latency": 0,

"migrationState": "BACKUP_IN_PROGRESS",

"startedOn": "2021-11-20T13:07:07.8347343+00:00"

}

}

},

"state": "InProgress"

},

"dBsToMigrate": [

"postgres"

],

"migrationDetailsLevel": "Full",

"migrationId": "03bcafd3-ec31-430f-b99d-b6a5f17af046",

"migrationName": "128b1387-d6d5-4f2f-a58b-f765f22352e7",

"migrationWindowStartTimeInUtc": "2021-11-20T12:58:59.1944872Z",

"overwriteDBsInTarget": true,

"setupLogicalReplicationOnSourceDBIfNeeded": true,

"sourceDBServerMetadata": {

"location": "japaneast",

"sku": {

"name": "GP_Gen5_32",

"tier": "GeneralPurpose"

},

"storageMB": 4219904,

"version": "11"

},

"sourceDBServerResourceId": "subscriptions/050e5060-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/riosourcesinglerg/providers/Microsoft.DBforPostgreSQL/servers/riosourcesingle",

"targetDBServerMetadata": {

"location": "Japan East",

"sku": {

"name": "Standard_D32ds_v4",

"tier": "GeneralPurpose"

},

"storageMB": 4194304,

"version": "12"

},

"targetDBServerResourceId": "/subscriptions/050e5060-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/riotargetflexiblerg/providers/Microsoft.DBforPostgreSQL/flexibleServers/riotargetflexible"

},

"resourceGroup": "riotargetflexiblerg",

"type": "Microsoft.DBforPostgreSQL/flexibleServers/migrations"

}

]

}Waited for a several hours, the status changed to “WaitingForCutoverTrigger”.

{

"value": [

{

"id": "/subscriptions/050e5060-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/riotargetflexiblerg/providers/Microsoft.DBforPostgreSQL/flexibleServers/riotargetflexible/migrations/128b1387-d6d5-4f2f-a58b-f765f22352e7",

"location": "Japan East",

"name": "128b1387-d6d5-4f2f-a58b-f765f22352e7",

"properties": {

"currentStatus": {

"currentSubStateDetails": {

"currentSubState": "WaitingForCutoverTrigger",

"dbDetails": {

"postgres": {

"appliedChanges": 0,

"cdcDeleteCounter": 0,

"cdcInsertCounter": 0,

"cdcUpdateCounter": 0,

"databaseName": "postgres",

"fullLoadCompletedTables": 1,

"fullLoadErroredTables": 0,

"fullLoadLoadingTables": 0,

"fullLoadQueuedTables": 0,

"incomingChanges": 0,

"initializationCompleted": true,

"latency": 0,

"migrationState": "READY_TO_COMPLETE",

"startedOn": "2021-11-20T13:07:07.8347343+00:00"

}

}

},

"state": "WaitingForUserAction"

},

"dBsToMigrate": [

"postgres"

],

"migrationDetailsLevel": "Full",

"migrationId": "03bcafd3-ec31-430f-b99d-b6a5f17af046",

"migrationName": "128b1387-d6d5-4f2f-a58b-f765f22352e7",

"migrationWindowStartTimeInUtc": "2021-11-20T12:58:59.1944872Z",

"overwriteDBsInTarget": true,

"setupLogicalReplicationOnSourceDBIfNeeded": true,

"sourceDBServerMetadata": {

"location": "japaneast",

"sku": {

"name": "GP_Gen5_32",

"tier": "GeneralPurpose"

},

"storageMB": 4219904,

"version": "11"

},

"sourceDBServerResourceId": "subscriptions/050e5060-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/riosourcesinglerg/providers/Microsoft.DBforPostgreSQL/servers/riosourcesingle",

"targetDBServerMetadata": {

"location": "Japan East",

"sku": {

"name": "Standard_D32ds_v4",

"tier": "GeneralPurpose"

},

"storageMB": 4194304,

"version": "12"

},

"targetDBServerResourceId": "/subscriptions/050e5060-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/riotargetflexiblerg/providers/Microsoft.DBforPostgreSQL/flexibleServers/riotargetflexible"

},

"resourceGroup": "riotargetflexiblerg",

"type": "Microsoft.DBforPostgreSQL/flexibleServers/migrations"

}

]

}Finally, I executed ‘cutover’ command for the migration.

$ az postgres flexible-server migration update -n riotargetflexible -g riotargetflexiblerg --migration-name 128b1387-d6d5-4f2f-a58b-f765f22352e7 --cutoverChecked whether the database was successfully migrated to target Flexible Server or not. It worked.

$ psql "host=riotargetflexible.postgres.database.azure.com port=5432 dbname=postgres user=rifujita sslmode=require" -c "SELECT pg_size_pretty(pg_database_size('postgres'));"

pg_size_pretty

----------------

148 GB

(1 row)How long did it take?

In this case, it took about 7 hours to migrate for 148GB of data. If you require the shorter window to migrate, customized DMS to meet your requirements is strongly recommended.

| Events | Start | duration |

| PerformingPreRequisiteSteps | 21:58:59 | 00:08:05 |

| MigratingData | 22:07:04 | 07:03:03 |

| WaitingForCutoverTrigger | 05:10:07 | |

| Total Duration | 07:11:08 |